“Where do I place my Google Analytics code?”

Previously we talked about utilising Href Lang; this week we will be talking about how the placement of your Google Analytics code affects your data.

“Understanding the best spot for implementing your Google Analytics code…”

Placement

Google Analytics works through a small JavaScript snippet. This snippet, placed in your website’s header section, connects your pages with Google, the provider of your site’s valuable data. If the tag is not on every page of your site, your data will be inaccurate, because the pages without it are not tracked. Visitors whose browsers have JavaScript disabled are not tracked either, which also affects your data.

– A quick fix is to use the Screaming Frog tool to search all your pages for any that are missing the code.
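If you want to check a handful of pages yourself, the idea behind that crawl fits in a few lines of Python. This is a minimal sketch. It assumes you already have each page’s HTML; the `PAGES` dictionary, its URLs and the property IDs are all hypothetical. It also assumes the snippet can be recognised by a `UA-` property ID or by a reference to `ga.js`, `analytics.js` or `gtag/js`. That regular expression is our own assumption, not an official rule.

```python
import re

# Hypothetical crawl output (URL -> raw HTML). In practice this would come from
# a crawler such as Screaming Frog; these pages and IDs are made up.
PAGES = {
    "https://example.com/": (
        "<html><head><script async "
        "src='https://www.googletagmanager.com/gtag/js?id=UA-12345-1'></script>"
        "</head><body>Home</body></html>"
    ),
    "https://example.com/about": "<html><head></head><body>About us</body></html>",
    "https://example.com/contact": (
        "<html><head><script>ga('create', 'UA-12345-1', 'auto');</script>"
        "</head><body>Contact</body></html>"
    ),
}

# Assumed signatures of a Google Analytics tag: a "UA-" property ID or a
# reference to one of the GA loader scripts.
GA_PATTERN = re.compile(r"UA-\d+-\d+|ga\.js|analytics\.js|gtag/js")


def pages_missing_ga(pages: dict[str, str]) -> list[str]:
    """Return the URLs whose HTML contains no recognisable GA snippet."""
    return sorted(url for url, html in pages.items() if not GA_PATTERN.search(html))


if __name__ == "__main__":
    for url in pages_missing_ga(PAGES):
        print("Missing GA code:", url)
```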

Location

The location of your tracking code within the page can also greatly affect your Analytics data. Many web developers like to place the tracking code just above the closing </body> tag so that images and other content are loaded before the code is executed. In theory, this reduces page load time.

However, if the Google Analytics tracking code is placed at the end of the <body>, visitors who quickly click through to another page before the code has executed may not be tracked.

– We recommend placing the analytics code near the top of the page, just before the closing </head> tag.
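To see where the snippet actually sits on a page, here is a minimal Python sketch. It assumes the snippet can be found by a marker string; we use the `analytics.js` loader as a hypothetical default. It then compares that position with the closing `</head>` tag. This is a rough text search, not a full HTML parser.

```python
def ga_location(html: str, marker: str = "analytics.js") -> str:
    """Report where a GA snippet sits: 'head', 'body' or 'missing'.

    Assumes the snippet can be identified by `marker` (a hypothetical default)
    and treats anything after the closing </head> tag, or on a page with no
    </head> at all, as being in the body.
    """
    lowered = html.lower()
    pos = lowered.find(marker.lower())
    if pos == -1:
        return "missing"
    head_end = lowered.find("</head>")
    if head_end != -1 and pos < head_end:
        return "head"
    return "body"


if __name__ == "__main__":
    # Hypothetical pages: one tagged in the head, one at the end of the body.
    in_head = ("<html><head><script async "
               "src='https://www.google-analytics.com/analytics.js'></script>"
               "</head><body></body></html>")
    in_body = ("<html><head></head><body><p>Hi</p><script "
               "src='https://www.google-analytics.com/analytics.js'></script>"
               "</body></html>")
    print(ga_location(in_head), ga_location(in_body))
```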

Ahoy fellow passengers! Follow us & stay tuned next week for more information on alternative methods… and every Tuesday for more technical issues reviewed by Tug.

Why not like us on Facebook too?

“How do I target different countries? How can I utilise Href Lang?”

Previously we talked about utilising local search; this week we will be talking about the benefits of “Href Lang”.

rel="alternate" and Href Lang deciphered…

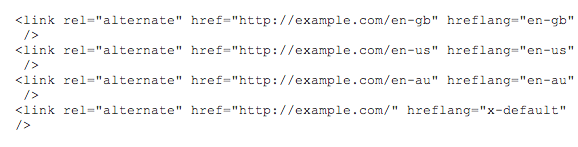

Here at Tug, we “Pull Traffic”. Because we work with international brands, clients sometimes ask us for a way to provide pages targeted at specific countries, such as a promotion for one particular country. “Not a problem”: we can resolve this using the rel="alternate" and hreflang attributes.

These attributes tell search engine robots that two similar pages are not duplicate content. Instead, they are versions targeted at different locations, each just as important as the other. The difference may only be a change of pricing, or a change to the native language of each location. Either way, it is very important that these attributes are defined, so that search engine robots understand how the pages relate.

Hreflang attributes are most commonly used when:

- You have similar content across your pages but specific promotions and pricing for each location.

- The page has the same content but is a translated version.

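To implement them, add a link element such as <link rel="alternate" hreflang="en-gb" href="https://example.com/uk/" /> to the <head> of each page. Include one element for every language or region version of the page, including the page itself.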
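For illustration, here is a small Python helper that generates the `<link rel="alternate" hreflang>` tags for a page with several country or language versions. The URLs are hypothetical. The optional `x-default` entry, a catch-all for visitors who match none of the listed versions, is our addition. The same set of tags would go in the `<head>` of every version, so each page also references itself.

```python
from html import escape


def hreflang_links(alternates: dict[str, str]) -> str:
    """Build the <link rel="alternate" hreflang=...> tags for a page's <head>.

    `alternates` maps a language or language-region code (e.g. "en-gb") to the
    URL of that version. Every version of the page should carry the same full
    set of tags, including a tag pointing at itself.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    return "\n".join(tags)


if __name__ == "__main__":
    # Hypothetical UK and Australian versions of a promotion page.
    print(hreflang_links({
        "en-gb": "https://example.com/uk/promo/",
        "en-au": "https://example.com/au/promo/",
        "x-default": "https://example.com/promo/",
    }))
```

Sorting the codes is only there to make the output stable; the order of the tags does not matter.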

“How do I rank and be listed for local search and what are the benefits?”

Previously we talked about redirections; this week we will be talking about utilising local search.

Benefits of local search and why I would want to be listed…

Local search results benefit your business because they help you attract potential customers nearby. This is especially useful for physical retail businesses that want to target the area surrounding their premises. Local search listings also give you the opportunity to outrank non-local search results and even your business competitors.

There are a number of requirements and factors to consider in order to hop on the local search bandwagon:

- Optimisation of your business website

- Your geographic Google Places listing

- Backlinks

- Citations

To be listed, you must set up a Google+ Local page for your business. You should also list your business in local business directories such as Yelp, and work on gaining more customer reviews.

Being listed in local search will gain you more traffic as well as more leads. Not only will you outrank traditional search listing competitors, but you will also be deemed more relevant as a local business. Don’t underestimate the effect of appearing in local search; it can bring you many more crucial leads.

“What is a Robots.txt file, and do I need one for my site?”

Last week we talked about redirections; this week we will be talking about utilising a Robots.txt file.

Benefits of a Robots.txt file and what it is used for…

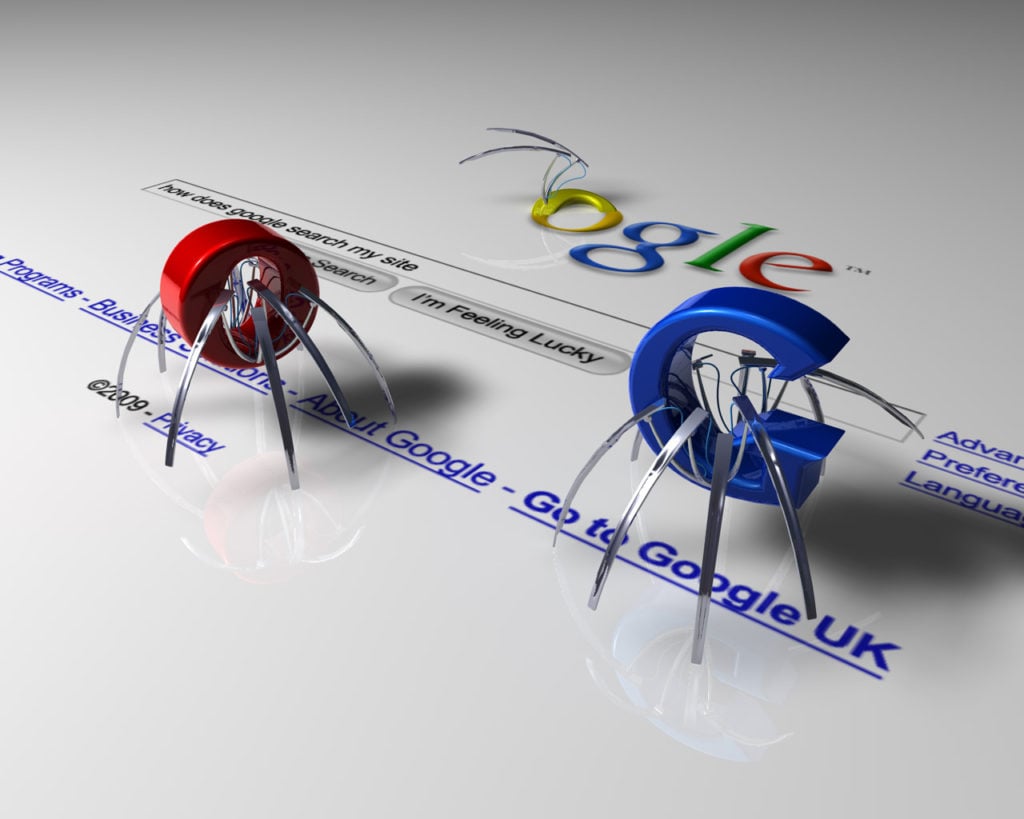

Ideally, in the SEO world, we are great cooks and ever-welcoming hosts, and we certainly love inviting search engine spiders to our site to feast on our pages. We would love them to crawl all over our pages and be impressed with what we have cooked up. That way they will eventually give us as many thumbs up as possible and list us in their search engine index for our keywords.

Having a site crawled is a great opportunity to show off our site and impress these judges. However, there might be a few places we don’t want them visiting, and this is where a Robots.txt file comes in.

A Robots.txt file is a plain text file in the root of your site. It houses the list of URLs that search engine spiders are asked not to crawl. No juice or equity will be passed along from URLs blocked using a Robots.txt file.

We do not advise using a Robots.txt file to disallow duplicate content, because you can use a “Rel Canonical” tag instead. It is also worth mentioning that disallowing a URL will not prevent it from showing up in search results, which may lead to a “suppressed listing”. Google’s search engine spiders have no access to content blocked with “Disallow”, so they have no information or snippet for that URL. If the URL is linked to from elsewhere, it can still be displayed on Google’s SERPs (Search Engine Results Pages), but without a description, which makes for a bad user experience. Note: a disallowed URL can still gain link juice and equity from links pointing to it. Because it is blocked, however, it is suppressed and cannot pass that valuable equity on. As mentioned above, we recommend alternative methods for duplicate content issues.

Robots.txt is a good way of letting search engine spiders know which parts of the site you want to exclude from crawling. It can also point them to where, for example, your sitemap is located. Blocking URLs with a Robots.txt file does not prevent them from displaying on SERPs; it only prevents them from being crawled. It is generally better to have a Robots.txt file in place than to have none at all.
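To see how a crawler reads these rules, you can use Python’s standard-library `urllib.robotparser` module. It parses a robots.txt file and reports whether a given URL may be crawled. The robots.txt below is a hypothetical example: it blocks a checkout area and internal search results, and adds a `Sitemap` line. This parser is only an approximation, because individual search engines, Google included, apply their own matching rules.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: keep all crawlers out of the checkout and internal
# search pages, and tell them where the XML sitemap lives.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search

Sitemap: https://example.com/sitemap.xml
"""


def crawl_decisions(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True (may be crawled) or False (disallowed) for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


def sitemap_urls(robots_txt: str) -> list[str]:
    """Return the Sitemap: URLs declared in the file (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


if __name__ == "__main__":
    urls = [
        "https://example.com/products/boots",
        "https://example.com/checkout/step-1",
        "https://example.com/search?q=boots",
    ]
    for url, allowed in crawl_decisions(ROBOTS_TXT, urls).items():
        print(url, "->", "crawl" if allowed else "blocked")
    print("Sitemaps:", sitemap_urls(ROBOTS_TXT))
```

A `False` here only means “do not crawl”. It says nothing about whether the URL can still appear in search results.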

“What is a Redirect, and do I need to implement Redirects for my site?”

Last week we spoke about sitemaps; this week we will be explaining redirections.

Benefits of redirections and what redirects are for…

A redirection is the process of forwarding one URL to a different destination URL. For SEOs, it is common practice to redirect URLs, either on client sites or on their own. Redirections can have a huge impact on your SEO. Here is an example of the different redirections. Let’s dig deeper into the most frequent and important redirects.

There are a number of reasons why a redirect might be needed, for example:

– A broken URL

– For UI purposes, A/B testing of a newly designed webpage

– The webpage no longer functions or serves its purpose

– The existing page has a newer or improved version

– The current webpage is under construction, being fixed or being updated

301

A 301 redirect permanently moves a URL to a new URL. It tells search engine bots that the page and its content have moved to the destination URL for good. The link equity the redirected page has gained passes to the new URL, so you don’t lose all the credit and juice you collected with the previous page. This is a real benefit for SEO, although it does take some time for search engine bots to re-evaluate the new page and rank it.

302

A 302 redirect is a temporary redirect. It is similar to a ‘Be Right Back’ sign and should be used as a temporary measure, not for prolonged periods. With a 302 redirect, the original URL keeps all of its qualities, and none of those juicy qualities are passed on to the destination URL. Take extra care when using 302 redirects, because using one where a permanent redirect is needed can seriously damage your search engine rankings.

404

A 404 is not actually a redirect but an error status code meaning that the page is missing or no longer available. It is probably one of the worst things that can happen when browsing a site. A visitor expects to arrive on a page full of engaging content, lovely pictures and amazing products. Instead, they get hit in the face by the wall of a 404 page. “Ouch!”
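To make the three status codes concrete, here is a minimal sketch of how a server might answer them. It is written as a tiny Python WSGI application using only the standard library. The paths and the redirect table are hypothetical. A real site would normally configure redirects in its web server or CMS rather than in hand-written code.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical redirect table: old path -> (status line, destination path).
REDIRECTS = {
    "/old-boots": ("301 Moved Permanently", "/boots"),   # moved for good
    "/summer-sale": ("302 Found", "/sale-coming-soon"),  # temporary detour
}
LIVE_PAGES = {"/boots", "/sale-coming-soon"}


def app(environ, start_response):
    """Answer with a 301/302 redirect, the page itself, or a 404."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        status, location = REDIRECTS[path]
        start_response(status, [("Location", location), ("Content-Type", "text/plain")])
        return [b"Redirecting to " + location.encode()]
    if path in LIVE_PAGES:
        start_response("200 OK", [("Content-Type", "text/html")])
        return [b"<h1>Live page</h1>"]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Page not found"]


def fetch(path):
    """Call the app directly (no network) and return (status, Location header)."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    result = {}

    def start_response(status, headers):
        result["status"] = status
        result["location"] = dict(headers).get("Location")

    list(app(environ, start_response))  # consume the response body
    return result["status"], result["location"]


if __name__ == "__main__":
    for path in ("/old-boots", "/summer-sale", "/boots", "/gone"):
        print(path, "->", fetch(path))
    # To serve it for real:
    # wsgiref.simple_server.make_server("", 8000, app).serve_forever()
```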

Redirects performed correctly can have a beneficial impact and increase your search visibility. Passing a previous site’s link juice on to a new site is a real plus, so don’t underestimate the value of redirects on your pages. Performed well, they will keep you on the good side of search engine spiders.

“What is a sitemap & do I really need one for my site?”

Last week we talked about site architecture; this week we will be diving into the importance of sitemaps and indexing!

Benefits of a sitemap and what sitemaps are for…

A sitemap, in short, is a map of your site: its routes and directions. Sitemaps come in two forms, HTML and XML. Uploading an XML sitemap lets search engines know which pages of your website are available for them to crawl. It breaks the work down for the search engine spiders that crawl your site, making it easier for them to identify all the pages on your website. It can also tell them which pages are priority pages and how frequently they are updated. Let’s take a look at the characteristics of each form and why they matter.

HTML

An HTML sitemap is an actual page on your site that lists the pages of your website. For an example, check out this sitemap. Its primary purpose is to serve users, not robots. A well-designed and well-planned site architecture shouldn’t require users to go through the sitemap to locate the page they are looking for. In some instances, though, a sitemap does make a difference. If you roughly know which page you are looking for, finding it through a sitemap saves you from loading additional pages before arriving at your destination. If you notice that a significant amount of traffic is coming through your sitemap, the usual reason is that your site architecture is not working for your visitors. You may then need to consider redesigning or restructuring how the content is presented.
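As a rough illustration, an HTML sitemap can be as simple as a list of links. The Python sketch below renders one from hypothetical (URL, title) pairs. How you group or nest the links is a design choice we leave out here.

```python
from html import escape


def html_sitemap(pages: list[tuple[str, str]]) -> str:
    """Render a plain HTML sitemap: one list item linking to each page."""
    items = "\n".join(
        f'  <li><a href="{escape(url)}">{escape(title)}</a></li>'
        for url, title in pages
    )
    return f"<ul>\n{items}\n</ul>"


if __name__ == "__main__":
    # Hypothetical pages for illustration.
    print(html_sitemap([
        ("/", "Home"),
        ("/boots", "Boots & Shoes"),
        ("/contact", "Contact us"),
    ]))
```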

XML

An XML sitemap is purely for search engine spiders to crawl. It allows them to process each individual page and understand your pages better, so that your pages can be indexed (here is an example XML Sitemap). An XML sitemap also gives search engine spiders a clearer picture of how your website’s architecture works. For example, if you have a new page with few or no links pointing to it, or if your site has dynamic content, an XML sitemap helps get those pages indexed.
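For reference, an XML sitemap following the sitemaps.org protocol is a `<urlset>` of `<url>` entries. Each entry has a `<loc>` and optional `<lastmod>`, `<changefreq>` and `<priority>` fields; the last two are how a sitemap hints at update frequency and priority pages. Here is a minimal Python sketch that builds one with the standard-library `xml.etree.ElementTree`. The URLs, dates and values are hypothetical, and search engines treat the optional fields as hints at most.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
OPTIONAL_TAGS = ("lastmod", "changefreq", "priority")


def build_sitemap(entries: list[dict]) -> str:
    """Build sitemap XML from dicts with a 'loc' key and optional extra fields."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        for tag in OPTIONAL_TAGS:
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        {"loc": "https://example.com/", "lastmod": "2014-01-07",
         "changefreq": "weekly", "priority": 1.0},
        {"loc": "https://example.com/boots", "changefreq": "monthly", "priority": 0.8},
    ]))
```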

The more of a site’s pages that are indexed and favoured by search engine spiders, the more the site is generally seen as trusted and worthy, offering beneficial information. In general, the more pages indexed, the better.

There are many ways to create an XML sitemap; here is a link which shows how to create one.

Having your site crawled by search engine spiders and getting a large volume of pages indexed benefits your whole website, so don’t underestimate the value of having an XML sitemap.

“How do I perform a quick fix for my Site Architecture?”

First on deck this week, we will be introducing you to two good friends: Xenu’s Link Sleuth & Screaming Frog!

Highlights of how Xenu & Screaming Frog can help your Site Architecture…

Xenu & Screaming Frog both do a great job. Both tools crawl your chosen website and flag many issues you normally wouldn’t notice on the front end, and they are completely free (the free version of Screaming Frog has restrictions).

Discover Broken Links

Like the majority of crawlers, Xenu will identify & highlight to you any broken links on your website.

– Simply click the ‘Check URL’ button.

– Enter your website URL (and un-tick ‘Check external links’).

After Xenu has finished, press [Ctrl]+[B] to display a list of all the broken links within your site. With this list, you can go to each URL and decide whether a redirect needs to be put in place.
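Under the hood, a broken-link report is just a filter over the crawl results. The Python sketch below assumes you already have a list of (linking page, linked URL, HTTP status) records; the records here are hypothetical. It groups every link whose target returned a 4xx or 5xx status, so you can see which pages need fixing and which URLs may need a redirect.

```python
# Hypothetical crawl results: (page containing the link, linked URL, status code
# the linked URL returned). A real crawler such as Xenu gathers this for you.
LINKS = [
    ("/", "/boots", 200),
    ("/", "/old-sale", 404),
    ("/boots", "/boots/red", 200),
    ("/blog/post-1", "/old-sale", 404),
    ("/blog/post-1", "/contact", 500),
]


def broken_links(links):
    """Group links whose target returned a 4xx/5xx status by (target, status)."""
    report = {}
    for source, target, status in links:
        if status >= 400:
            report.setdefault((target, status), []).append(source)
    return report


if __name__ == "__main__":
    for (target, status), sources in broken_links(LINKS).items():
        print(f"{target} returned {status}; linked from: {', '.join(sources)}")
```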

Level Page Structuring

A very useful Xenu feature is the ‘Level’ column, which shows how deep each URL sits within your site structure. A lower level number means the URL is reached in fewer clicks from your starting page, so it is likely to be crawled before URLs with a higher level number. A level of 7 means a search engine spider such as Googlebot has to crawl through 7 levels before reaching that page. Pages near the top levels of your site architecture tend to have a higher priority with search engines. With Xenu’s list, you can see which pages need a change of priority and consider changing the site architecture for your priority pages. Never hide a valued page.
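The idea behind a level count can be sketched as a breadth-first search over your internal links, starting from the homepage. A page’s level is the fewest clicks needed to reach it. This is a minimal Python sketch on a hypothetical link graph. We treat the homepage as level 0, and Xenu’s own numbering may differ slightly.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
SITE = {
    "/": ["/boots", "/about"],
    "/boots": ["/boots/red", "/boots/black"],
    "/boots/red": ["/boots/red/size-guide"],
    "/about": [],
    "/boots/black": [],
    "/boots/red/size-guide": [],
}


def click_depth(graph, start="/"):
    """Breadth-first search from `start`; level = fewest clicks to reach a page."""
    levels = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in graph.get(page, []):
            if link not in levels:  # first visit is always the shortest path
                levels[link] = levels[page] + 1
                queue.append(link)
    return levels  # pages with no path from `start` (orphans) are absent


if __name__ == "__main__":
    for page, level in sorted(click_depth(SITE).items(), key=lambda kv: kv[1]):
        print(level, page)
```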

Duplicate Page Titles

Xenu will highlight duplicate page titles. Simply sort the titles in ascending order and identical titles will be grouped together, making any duplicates easy to spot. It is common for a WordPress-run site to contain duplicate titles, and this quick fix lets you identify them and optimise your titles again.
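The same check is easy to reproduce on an exported list of titles. In this minimal Python sketch the URL-to-title mapping is hypothetical. Treating titles that differ only in case or spacing as duplicates is our own assumption.

```python
from collections import defaultdict


def duplicate_titles(titles: dict[str, str]) -> dict[str, list[str]]:
    """Return {normalised title: [urls]} for titles used on more than one page."""
    groups = defaultdict(list)
    for url, title in titles.items():
        key = " ".join(title.split()).lower()  # ignore case and extra spaces
        groups[key].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}


if __name__ == "__main__":
    # Hypothetical titles, e.g. paginated WordPress archive pages.
    print(duplicate_titles({
        "/": "Tug | Home",
        "/blog/page/2": "Blog | Tug",
        "/blog/page/3": "Blog |  Tug",
        "/about": "About | Tug",
    }))
```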

Internal Linking

Another very useful feature is the ‘In Link’ column, which shows how many pages within your own site link to a given URL. URLs with very few internal links tell search engine bots that they are not priority pages, because few or no pages link to them. This is understandable for pages such as a ‘disclaimer’, but you might want to reassess which pages are your priorities. Do keep in mind not to over-optimise.
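To count internal links yourself, you only need the link graph from a crawl. The Python sketch below counts how many distinct pages link to each URL and lists the weakly linked ones for review. The graph and the threshold of 2 are hypothetical choices.

```python
from collections import Counter

# Hypothetical internal link graph: page -> pages it links to.
SITE = {
    "/": ["/boots", "/about", "/disclaimer"],
    "/boots": ["/", "/about", "/boots/red"],
    "/about": ["/"],
    "/boots/red": ["/boots"],
    "/disclaimer": [],
}


def inlink_counts(graph):
    """Count how many distinct other pages link to each page."""
    counts = Counter({page: 0 for page in graph})
    for source, targets in graph.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts


def weakly_linked(graph, threshold=2):
    """Pages with fewer than `threshold` internal links pointing at them."""
    return sorted(page for page, n in inlink_counts(graph).items() if n < threshold)


if __name__ == "__main__":
    print(weakly_linked(SITE))
```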

Identifying missing titles + Counter

After you enter your site URL, Screaming Frog will crawl your site just as Xenu does and report back vital information. A nifty feature of Screaming Frog is that it shows the length of your titles. A good practice is to keep all your title tags under 70 characters. Because Screaming Frog shows each title’s length, you can efficiently shorten any overly long titles to under 70 characters. It will also identify any pages that are missing a title.
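You can write a rough equivalent of this check with Python’s built-in `html.parser`. In this sketch the 70-character limit comes from the guideline above. The class and function names and the sample markup are hypothetical, and the limit is a rule of thumb rather than a hard cut-off.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the page's <title> element, if there is one."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit_title(html: str, max_len: int = 70) -> str:
    """Return 'missing', 'too long (N chars)' or 'ok' for a page's title."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    title = (parser.title or "").strip()
    if not title:
        return "missing"
    if len(title) > max_len:
        return f"too long ({len(title)} chars)"
    return "ok"


if __name__ == "__main__":
    print(audit_title("<html><head><title>Boots | Tug</title></head></html>"))
    print(audit_title("<html><head></head><body></body></html>"))
```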

H1s

Screaming Frog also has a tab which displays the number of H1s on each page. With this feature you can efficiently correct your H1s and reduce them to a minimum for better SEO practice.
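Counting H1s per page is a similar small job for `html.parser`. Here is a minimal sketch with hypothetical sample markup. The parser lower-cases tag names, so `<H1>` is counted too.

```python
from html.parser import HTMLParser


class H1Counter(HTMLParser):
    """Count opening <h1> tags in a page."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "h1":  # tag names arrive lower-cased
            self.count += 1


def count_h1(html: str) -> int:
    parser = H1Counter()
    parser.feed(html)
    parser.close()
    return parser.count


if __name__ == "__main__":
    print(count_h1("<h1>Boots</h1><p>Our range</p><H1>Sale</H1>"))
```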
